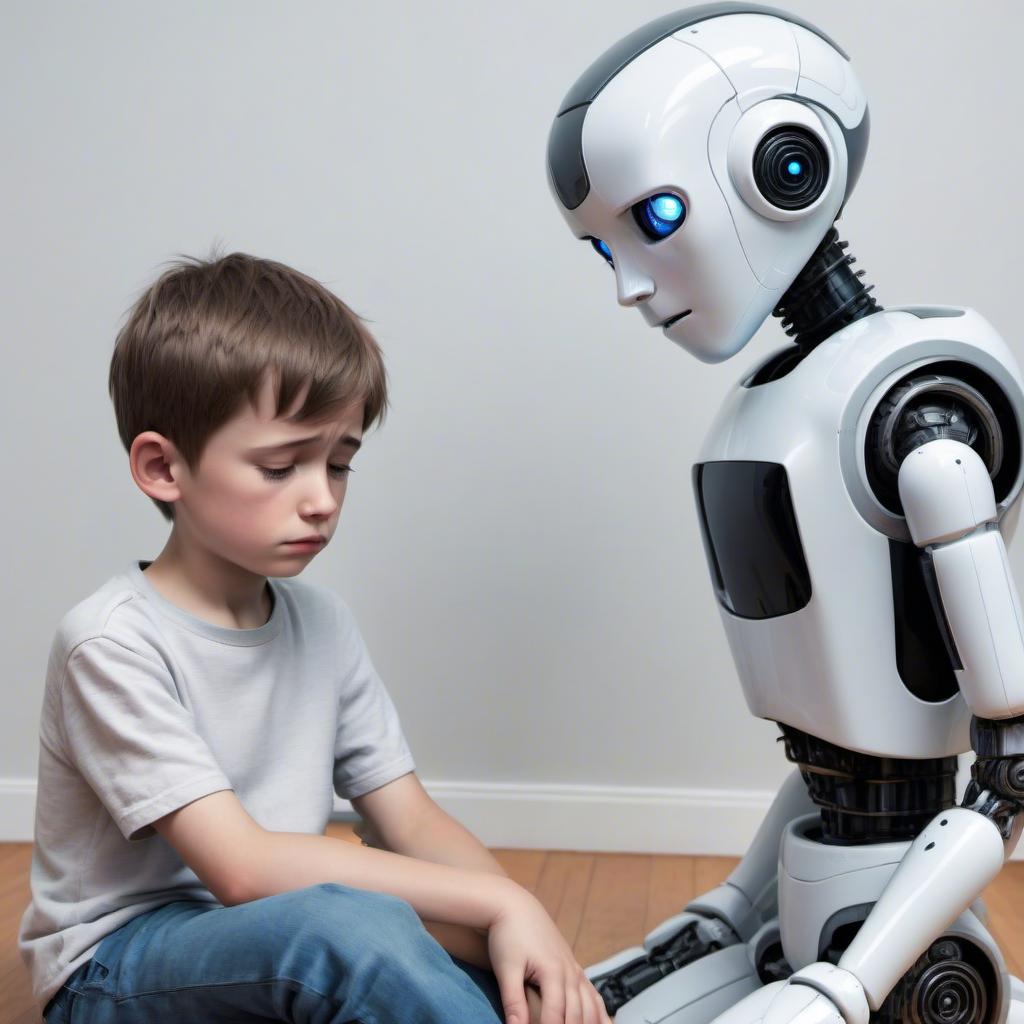

Miraenda is an interesting YouTube channel because it showcases various social robots in action. One of my favourite robots to watch is Moxie, because it tries so hard to be engaging and interactive but falls short on many occasions. It’s clearly engineered for kids, given the subject matter it wants to discuss and the way it approaches conversations, but it’s still a useful case study of where we’re at in social robot development.

This video is particularly interesting to me because my thesis is about robot emotions. I argue that, despite appearances, social robots are incapable of acting with empathy because they don’t understand emotions, and so cannot understand what a human feels and experiences. They cannot understand emotions because affect is not incorporated into their cognitive architecture in a way analogous to how it is in humans and animals. Emotions, as we see here, are treated as a kind of module to be added to an existing cognitive framework, rather than being built into the core of the robot’s being. These robots treat human emotions as just more incoming data to be processed in order to produce appropriate behaviours as outputs. The sadness the robot expresses in response to human sadness is not an act of empathy, because the robot doesn’t understand what sadness is; its behaviours are just outputs generated by its internal computer.

Although I talk about iCub in my thesis, the same argument can be applied to any of today’s robots. The full argument against iCub can be read in Chapter 3.3 of my dissertation; here’s the latest draft.