John Worrall’s paper “Structural realism: The best of both worlds?” mostly outlines the debate within the philosophy of science over whether scientific realism or anti-realism best captures our intuitions and intentions regarding the scientific process and its history. Although this topic is a fascinating discussion on its own, it will not be the focus of this article. Instead, I’d like to explore Worrall’s reply and its implications when approached from a metaphysical perspective. At the end of his paper, Worrall concludes that combining an aspect of realism with an anti-realist attitude produces a potential solution to the dilemma. Structural realism describes a perspective which can account for the predictive success of science while also explaining how scientific revolutions changed theories and research practices throughout history (Worrall 123). Structural realists believe that when scientific theories undergo conceptual change, their form or structure remains constant while the content of a theory may be modified based on new empirical findings (Worrall 117). Worrall believes this account can explain how scientific theories undergo both growth and replacement over time (Worrall 120).

However, structural realism can be further divided into two categories which support differing views. Epistemic structural realism (ESR) holds that all we can learn through scientific inquiry is the accuracy of a theory’s inherent structure, and not the concepts or entities themselves (Ladyman SEP). The more extreme version, ontic structural realism (OSR), states that there are no objects or things, and that the universe consists only of structures, forms, and relations (Ladyman SEP). Van Fraassen describes this position as “radical structuralism” (van Fraassen 280) and points to science’s use of mathematical formulas as motivation for this view (van Fraassen 304). Since physics uses math to describe how the physical world operates, and complex bodies of organic machinery operate based on the rules of physics, objects found in nature can theoretically be explained in mathematical terms. Although our conceptions of these entities may change dramatically over time, their mathematical descriptions and relations tend to expand as new discoveries are incorporated into existing theories (van Fraassen 305).

Although the discussion surrounding structural realism originally aimed to answer problems in the philosophy of science, OSR eventually became its own metaphysical view. This is partly due to discoveries made in physics over the previous century, which have shaped how we think about the natural world, especially in the subatomic domain (Ladyman SEP). At one point, an atom was thought to be the smallest unit of matter in the universe, with its original Greek word atomos meaning ‘indivisible’ (merriam-webster.com). Today, however, we are able to run tests which not only divide atoms, but smash them together in order to inspect the pieces which make up the subatomic particles themselves. Furthermore, the more our understanding of quantum physics grows, the less neatly packaged the world seems to be.

My goal here is not to convince you to fully adopt the OSR perspective, but to consider it as a tool for drafting instances of artificial general intelligence and artificial consciousness. Personally, I find this approach to understanding reality very interesting and am compelled by the notion of “structures all the way down.” However, some may find this problematic as the relata seem to be missing. What is the ‘stuff’ that the structure is made out of? What exactly is being organized in a structural way? In a nutshell, segments of miniature structures. An example of this is the relationship between chemical bonds and the physical laws which bind them together. Another is a Swiss army knife made of small metal parts, where these pieces consist entirely of a combination of metal atoms arranged into shapes. But these atoms themselves are just structures of subatomic particles, consisting of protons, neutrons, and electrons. Moreover, if we continue to zoom in, it turns out that these particles are just composed of other particles arranged in different relations. Each contemporary understanding of “matter” and “mass” changes based on technological improvements, which assist in the production and interpretation of scientific experiments. It may turn out that OSR becomes a useful perspective for conceptualizing our universe, especially as old assumptions are reworked to include new and possibly contradictory empirical discoveries.

Speaking of perception, if there are no objects, then does everything exist in the mind like Berkeley thought? I think the answer to this is a little ‘yes’ and a little ‘no’: the brain creates representations of concepts which are based on learned regularities from interacting within an environment. From an evolutionary perspective, the brain adapted to help promote an individual’s survival by learning to recognize patterns and recall previous events. As neuronal structures developed to support new and more complex functions, the individual’s subjective awareness of their abilities grew as well, eventually producing mental concepts and linguistic labels. For example, neurons in the primary auditory cortex are arranged tonotopically, where particular cells responding to specific frequencies are arranged by pitch from low to high (Romani, Williamson, and Kaufman 1339). In this sense, then, a C major scale played on a piano can be viewed as isomorphic to the way these frequencies are physically realized in the brain. Rather than the world or universe containing ‘a C major scale’, from an OSR perspective, reality only contains the laws which govern how sound pressures and vibrations must exist and operate such that a musical scale can be realized in a human brain. From an individual’s perspective, however, this type of stimulus is represented as ‘music’ or ‘the sound of a piano’.
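To make the isomorphism claim a little more concrete, here is a minimal Python sketch. It is only a toy model, not a description of real cortex: the function `toy_cortical_position` and its 20 Hz to 20,000 Hz range are my own assumptions, placing each frequency on a hypothetical one-dimensional axis spaced by log-frequency. The note frequencies use standard equal temperament with A4 tuned to 440 Hz. All the sketch checks is that the low-to-high order of the scale is preserved by the mapping, which is the structural sense of “isomorphic” used above.

```python
import math

# MIDI note numbers for one octave of the C major scale, C4 to C5.
C_MAJOR_MIDI = [60, 62, 64, 65, 67, 69, 71, 72]
NOTE_NAMES = ["C4", "D4", "E4", "F4", "G4", "A4", "B4", "C5"]


def midi_to_hz(note: int) -> float:
    """Equal-tempered frequency, with A4 (MIDI 69) tuned to 440 Hz."""
    return 440.0 * 2.0 ** ((note - 69) / 12)


def toy_cortical_position(freq_hz: float,
                          low_hz: float = 20.0,
                          high_hz: float = 20000.0) -> float:
    """Hypothetical tonotopic axis (an assumption of this sketch):
    0.0 at low_hz, 1.0 at high_hz, linear in log-frequency."""
    return math.log2(freq_hz / low_hz) / math.log2(high_hz / low_hz)


def is_order_preserving(xs, ys) -> bool:
    """True if ranking the items by xs gives the same order as ranking by ys."""
    order_x = sorted(range(len(xs)), key=lambda i: xs[i])
    order_y = sorted(range(len(ys)), key=lambda i: ys[i])
    return order_x == order_y


frequencies = [midi_to_hz(n) for n in C_MAJOR_MIDI]
positions = [toy_cortical_position(f) for f in frequencies]

for name, f, p in zip(NOTE_NAMES, frequencies, positions):
    print(f"{name}: {f:7.2f} Hz -> position {p:.3f}")

# The scale's pitch order and its order along the toy axis coincide.
print(is_order_preserving(frequencies, positions))
```

Nothing in the sketch contains ‘a C major scale’ as an object. There is only a set of frequencies, a mapping, and the relations that the mapping preserves.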

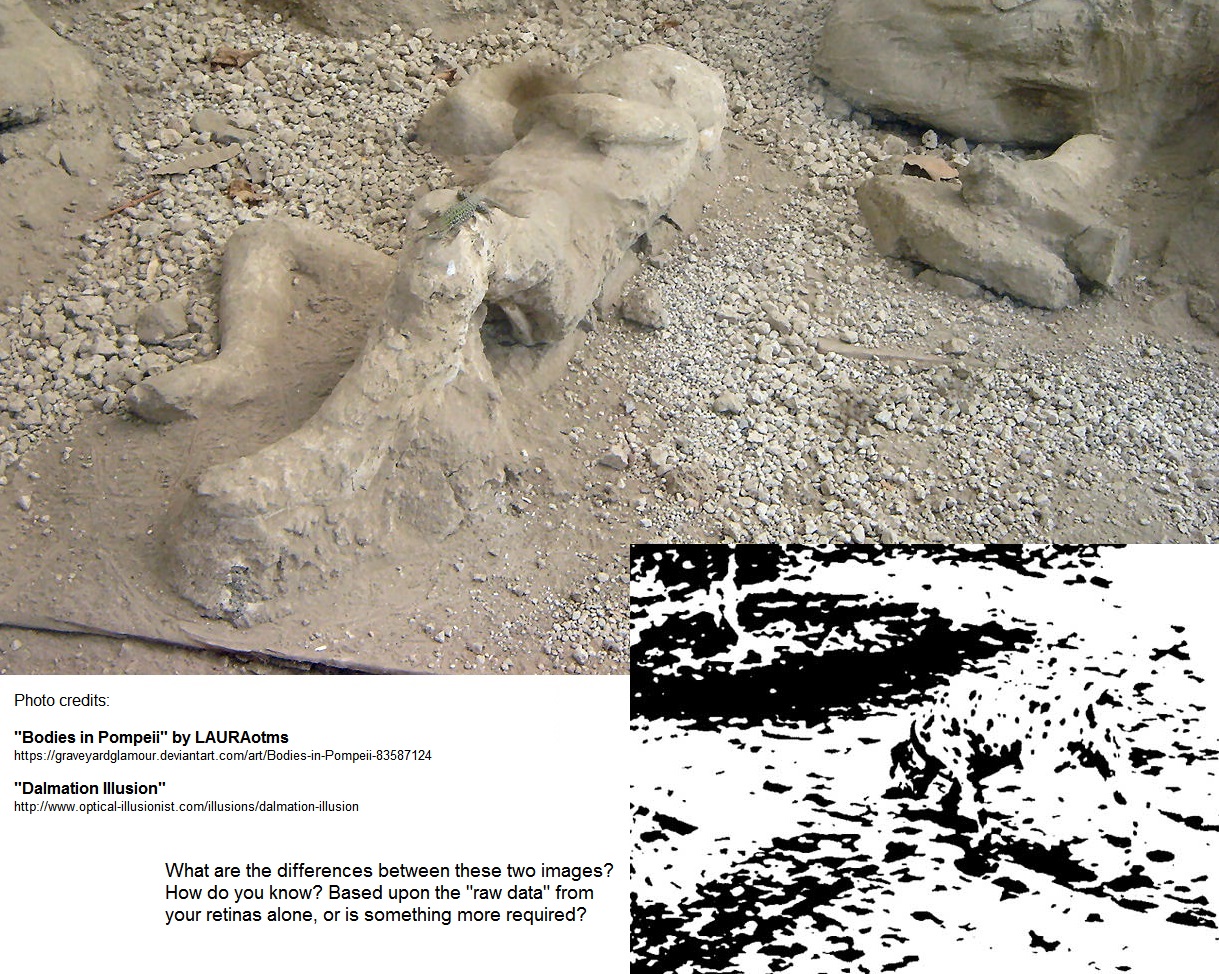

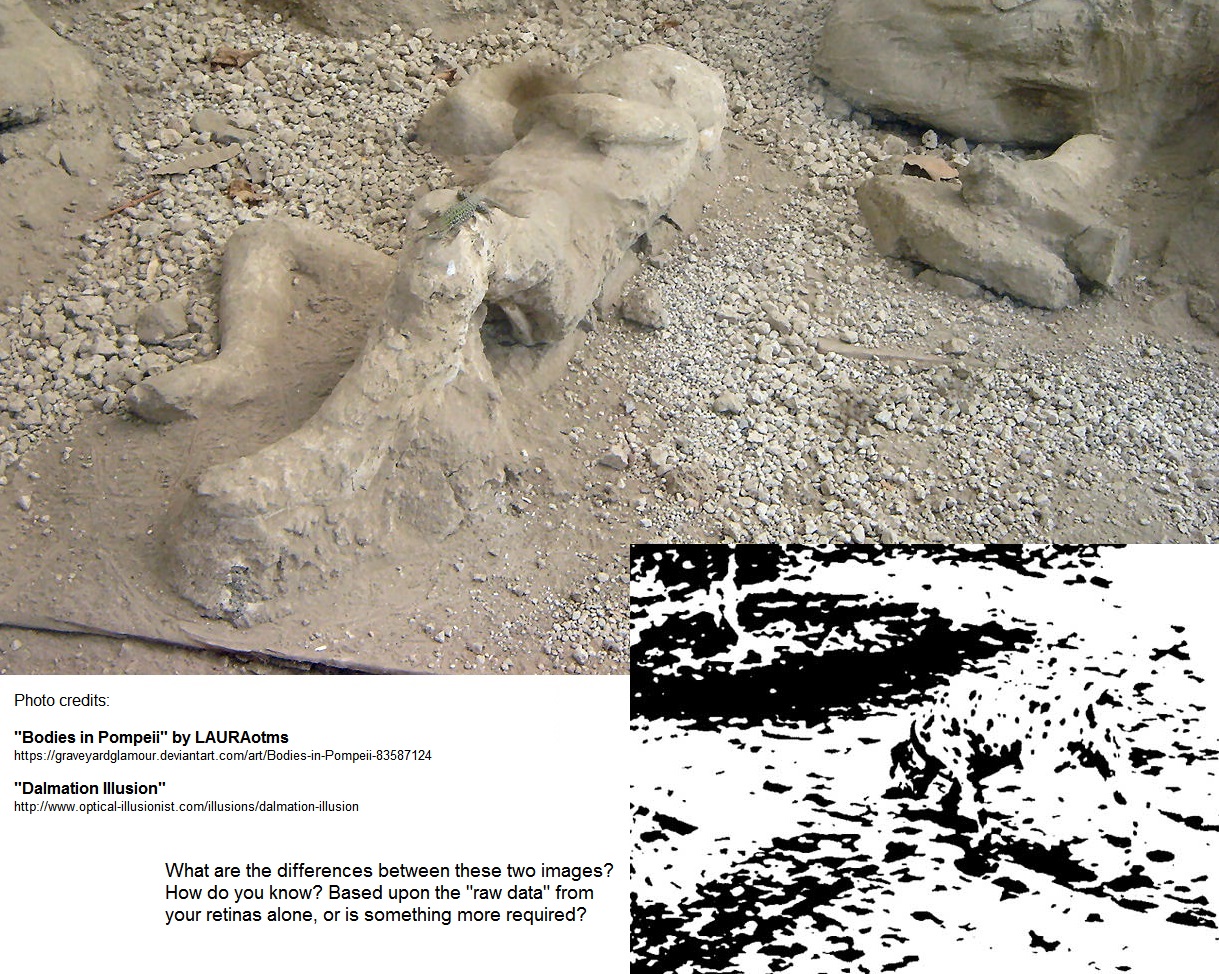

An unpublished article by Brian Cantwell Smith called “Non-Conceptual World” discusses a similar perspective to structural realism, claiming that concepts only exist in the mind (Cantwell Smith 8). One example Cantwell Smith mentions is the aftermath of the volcanic eruption which occurred in ancient Pompeii (see photo above). While he uses this as an example of how object recognition is an intentional process (Cantwell Smith 16), I was reminded of an optical illusion which shares important similarities with depictions of Pompeii. The Dalmatian Illusion (see photo above) is just a series of black and white dots, yet somehow the brain manages to pick out the form of a dog. In reality, however, there is no dog and the image is deemed an illusion. The image of the Pompeii disaster, by contrast, indicates that there really is a person amidst the variety of grey blotches. In both instances, the incoming visual information seems to be quite similar at first, but the stored contextual information associated with the visual stimuli creates a contrast in the conceptual representation of each “object”. I tend to agree with Cantwell Smith when he says “there aren’t any objects out there” (8) because an evolutionary perspective suggests the brain generated object-based concepts, not the universe.

If this is so, what can be said about human consciousness? Natural selection is the process of increasing genetic diversity through reproduction, as well as constraining it through environmental pressures, producing a mechanism for building and refining chemical and physiological structures. It seems likely that psychological structures are also subject to this same type of development, or at least impacted by it. Random genetic mutations may produce functional changes which impact neighbouring structures, requiring other neurons to update their internal and external organization as a result. If this change produces a large enough effect, a small patch of cortex or connected regions may also be impacted, leading the individual to notice alterations in their motor or perceptual abilities. By viewing the brain as a structure of networks and configurations, it can be suggested that consciousness may have emerged as a result of one or more self-organizing structures interacting within both internal and external environments over time.

Therefore, consciousness is an emergent property of the brain, but there may be more to this story, as will be discussed in future articles. Briefly though, consciousness, or some version of intelligent self-awareness, may be a direct result of the self-organizing system which constitutes evolution. Furthermore, could consciousness be an inevitable outcome of an instance of natural selection? Is there a threshold in the variables beyond which this outcome necessarily occurs, like an action potential in a neuron? I am also looking forward to discussing Dynamical Systems Theory and Information Theory as they relate to these ideas.

OSR is important for designing artificial minds because of its power to generate isomorphic versions of laws from the natural world. If an object or entity can be conceptualized as a series of structures with functional regularities, machine code and mathematical systems may be able to generate models which produce similar behaviours or results. Since neural networks and deep learning have found success in perceptual recognition, perhaps they will also prove beneficial in taking a developmental approach to artificial consciousness.

Works Cited

“Atom.” Merriam-Webster.com. Merriam-Webster, n.d. Web. 26 May 2018.

Blauw, Laura. Bodies in Pompeii. 2008, digital photograph. https://lauraotms.deviantart.com/art/Bodies-in-Pompeii-83587124

Ladyman, James, “Structural Realism”, The Stanford Encyclopedia of Philosophy (Winter 2016 Edition), Edward N. Zalta (ed.), URL = <https://plato.stanford.edu/archives/win2016/entries/structural-realism/>.

Romani, Gian Luca, Samuel J. Williamson, and Lloyd Kaufman. “Tonotopic organization of the human auditory cortex.” Science 216.4552 (1982): 1339-1340.

Van Fraassen, Bas C. “Structure: Its shadow and substance.” The British Journal for the Philosophy of Science 57.2 (2006): 275-307.

Worrall, John. “Structural realism: The best of both worlds?” Dialectica 43.1‐2 (1989): 99-124.